Visual Studio Code with GitHub Copilot

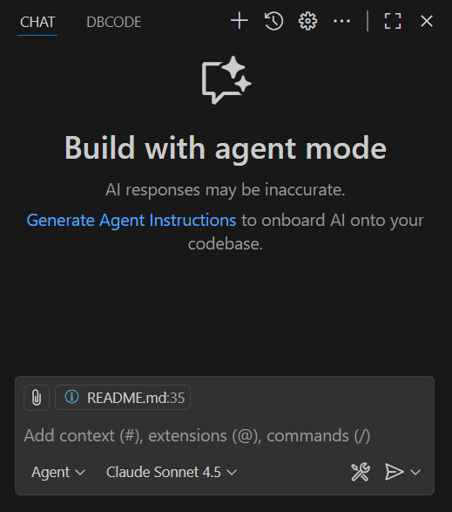

VSCode with Copilot is what I keep coming back to, and it has been improving the whole time I've been using it. Inline code completions are not particularly useful in my opinion (at worst, they're a distraction), but the agentic AI chat has been very impressive when paired with Anthropic's Claude models.

Recently I've been using Haiku 4.5 for quick boilerplate or 'easy' tasks (although it does sometimes miss the gist of things), Sonnet 4.5 for implementing features, and occasionally Google's Gemini 2.5 Pro for analysis and planning work. I have dabbled with Opus, but for me the 10x cost is not reflected in the results.

It's really impressive how well features are implemented, often with handling for edge cases that I hadn't accounted for in my initial prompts.

That said, things go much more smoothly with the setup described under Environment Configuration below.

Other Agents

Other agents I have used are Cline, Claude Code and Cursor. I have had good results with all of them, but an important part of using AI agents in practice is protecting yourself from the runaway costs that come with the pay-as-you-go pricing model that Cline uses.

It's too easy to spend $50 on a coding session with Cline. Initially I felt that removing the need for cost efficiency (from the tool's perspective) freed the tool up to produce the best code possible. However, I feel that techniques such as the context compression implemented by Copilot have allowed it to close the gap. Having a fixed-cost tool that I can use every day, without having to switch threads to stop the context becoming too long, removes mental overhead and stress.

While Cursor implements a fixed price model, the results (for me) have not made it worth switching IDE from the ubiquitous VS Code.

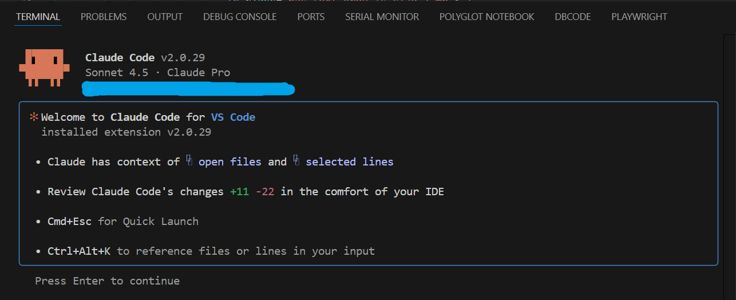

Claude Code performs well and has a usage allowance under the Pro plan, with the Anthropic models being generally regarded as the best models for coding. I feel, however, that using Claude models via VSCode's Copilot chat is more co-operative than just having the agent take control. The visual feedback, the ease of navigating files and the real-time diffs in VSCode make it much easier to keep up with and monitor the solution the agent is working on.

Of course, VSCode has a built-in terminal, through which you can use Claude Code (and Claude gains additional context when run inside the VSCode-hosted terminal). This is sometimes useful for running secondary background tasks as parallel agents.

Environment Configuration

copilot-instructions.md / CLAUDE.md

Set up your workspaces with the markdown file .github/copilot-instructions.md. Copilot will read this file before every task, saving you from having to repeat yourself regarding common task completion preferences. The cumulative effect of having a well-curated copilot-instructions.md over the course of a thread is game-changing.

If you are using other agents such as Claude Code, you might also set up CLAUDE.md, and have that file and copilot-instructions.md direct the AI to read a common prompt file e.g. READ_ME.ai.
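As a minimal sketch of that arrangement, both CLAUDE.md and .github/copilot-instructions.md could contain nothing more than a pointer like the one below. The wording is illustrative, and placing READ_ME.ai in the repository root is an assumption; whether an agent reliably follows such a pointer is worth checking in your own setup.

```markdown
<!-- Hypothetical content, shared by CLAUDE.md and .github/copilot-instructions.md -->
# Agent instructions

Before starting any task, read `READ_ME.ai` in the repository root (assumed location)
and follow the guidance in it. Treat that file as the single source of truth for
project conventions.
```

Keeping the real content in one file means there is only one place to update when your preferences change.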

Anthropic have published Claude 4 prompt engineering best practices, which offer useful insight into what you might want to include in this file.

You can put anything in, but I have written under the following headings:

Your Role

Tell the AI agent (Copilot from here on) what you expect of it in a general sense. LLMs are known to perform better when given a specific role and motivation.

From Claude 4 prompt engineering best practices - Add context to improve performance:

> Providing context or motivation behind your instructions, such as explaining to Claude why such behavior is important, can help Claude 4 models better understand your goals and deliver more targeted responses.
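A role section along these lines might look like the sketch below. The wording is hypothetical and not taken from my own file; the point is that it states both the role and the reason behind it.

```markdown
## Your Role

<!-- Illustrative wording only -->
You are a senior engineer working alongside me on this codebase. Changes made here
are deployed to production, so prefer small, reviewable edits over large rewrites,
and say so when you think an instruction should not be followed as written.
```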

Further Information

Other information relevant to the model.

Tools

Specify behaviours around tools (e.g. from Anthropic's Claude guide):

> After completing a task that involves tool use, provide a quick summary of the work you've done.

> If you create any temporary new files, scripts, or helper files for iteration, clean up these files by removing them at the end of the task.

Technology Stack

Make the tech stack used in your application clear, e.g.

- Languages

- Frameworks

- Key libraries

- Database

- Dependencies / tooling (e.g. Docker)

- Hosting platform (e.g. AWS / Azure)
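Filled in, that list might read something like the following sketch. Every value here is a placeholder chosen for illustration and does not describe a real project; substitute your own stack.

```markdown
## Technology Stack

<!-- All values below are hypothetical placeholders -->
- Languages: TypeScript (front end), C# (back end)
- Frameworks: React, ASP.NET Core
- Key libraries: Entity Framework Core
- Database: PostgreSQL
- Dependencies / tooling: Docker, Docker Compose
- Hosting platform: Azure
```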

Key Source Areas

Highlight areas of your code that you feel are important or that you find agents miss - for example, I highlight that the project maintains a migration scripts directory for database deployment tasks and schema changes.

Build and Debugging

Tell the agent how to run your application in development.
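For instance, a section like this sketch gives the agent enough to start the application itself. The commands, the script name and the port are assumptions about a hypothetical project and will differ in yours.

```markdown
## Build and Debugging

<!-- Commands and port are hypothetical; use whatever your project defines -->
- Start backing services with `docker compose up -d`.
- Run the application in development with `npm run dev` (assumes a `dev` script exists);
  it is expected to listen on http://localhost:3000.
- If the application is already running, reuse it rather than starting a second instance.
```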

Testing

Tell the agent what tests you want created and maintained, and which tools you use (e.g. use Playwright for E2E testing, with scripts located at tests/playwright, and highlight that the MCP server is available).

You might include instructions for logging in with test user accounts for authenticated areas of your application (be careful not to include passwords or access keys in this file).
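Put together, a testing section could look something like this sketch. The unit test rule and the environment variable names `E2E_USER` and `E2E_PASSWORD` are hypothetical; the idea is that the file names where credentials live without ever containing them.

```markdown
## Testing

<!-- Variable names and the unit test rule are illustrative assumptions -->
- Add or update unit tests for every behaviour change.
- For E2E tests use Playwright; scripts live in `tests/playwright`.
- The Playwright MCP server is available; use it to verify UI changes in a browser
  before reporting a task as complete.
- For authenticated areas, log in as the test user whose credentials are held in the
  environment variables `E2E_USER` and `E2E_PASSWORD`. Never write credentials into
  this file.
```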

Language / Locale

Provide information such as: use the 'en-GB' locale for spelling and grammar when writing comments and generating content.

Code Style

Describe code style preferences under specific language headings. If you maintain an .editorconfig, refer to it.

Some examples from instructions:

- Refer to `.editorconfig` for general code style guidelines.

- Use descriptive variable names (e.g., `catCatcherViewModel` for `CatCatcherViewModel`, not `vm`). Prefix if multiple instances of the same type are in scope.

- Keep view models and data models separate. Repositories should return data models only.

Use of MCP Servers

While Copilot has a lot of tooling built in, to get the best out of your tools, you might look to configure specialised MCP servers.

For example, the Playwright MCP server facilitates smoother E2E testing using Playwright. Combined with test instructions in copilot-instructions.md, it can make Copilot much more independent, so that far less manual verification is needed from the developer to complete tasks and resolve bugs.

.vscode/mcp.json

```json
{
  "servers": {
    "playwright": {
      "command": "npx",
      "args": [
        "@playwright/mcp@latest"
      ]
    }
  }
}
```

Model Selection (Anthropic Claude Models - Haiku, Sonnet, Opus are best for now)

My best experience, and I believe that of the majority of users, has been with Anthropic's Claude models. Anthropic's approach to developing their models makes for interesting listening, and I believe they will be at the forefront of LLM development for software engineering for some time to come.

Summary

I hope this has been of use. These are the key insights I have gathered while starting to integrate AI agents into my workflow. I will continue to update this post as I find new useful techniques.